PokaWindow

Turning Pokamind's raw AI emotion detection technology into a playful, trust-centered mental wellness experience, PokaWindow.

Contribution

Product strategy ✺ UX/UI Design ✺ Research ✺ MVP Launch

Team

1 UX designer (me) ✺ 1 project manager (founder) ✺ 1 ML/AI engineer (founder) ✺ 2 psychologists ✺ 2 full-stack engineers

Duration

4 months (Mar - Jun 2023)

Impact

Designed an MVP that launched with 100% positive user feedback

Reframed AI product strategy to boost delight, trust & engagement

Bridged emotional AI output with playful, narrative UX

Overview

The founders of Pokamind had an advanced, multimodal generative AI technology that could detect emotions from facial expressions in video. But their initial MVP, built purely on founder intuition, suffered from low usability and unclear positioning.

I reframed and redesigned their first product, PokaWindow, into a playful, human-centered journaling experience. What changed? Everything, from the AI’s voice to how users feel: seen, not judged.

Challenge

How might we turn emotion-detecting AI into a warm, engaging self-care experience that builds trust and supports mental health?

My Role

- Redefined product vision into a playful, emotionally safe AI interaction

- Designed user flows that transformed emotion data into narrative feedback

- Led research, testing, and delivery of the new experience into MVP

User Insight

Our survey and user testing sessions on the previous MVP showed that users found recording themselves without context performative and uncomfortable. The raw emotion scores they received felt clinical, even judgmental.

“I don’t want AI to tell me how I feel, because I’m the one that knows the best. I want it to feel more like it understands me.” - User A, 26

“It just felt weird talking to a camera with no idea what I’m supposed to say… and then seeing numbers about my feelings didn’t say much back to me either.” - User B, 22

Through synthesis, we realized the core issue wasn’t just usability. It was emotional trust. Users didn’t want to be analyzed. They wanted to be understood. They craved a sense of guidance, meaning, and emotional safety.

Design Approach

We reframed PokaWindow as an AI companion that gently encourages self-awareness through narrative, play, and emotional attunement. Instead of simply showing emotion stats and numbers, we wanted to offer a tool that lets users explore their own minds with curiosity and fun. To do so, we focused on reframing our technology, humanizing it, and enhancing engagement.

- Reframe: From analytic tool → emotional reflection ritual

- Humanize: From data output → metaphorical story feedback

- Engage: From passive check-ins → playful daily habit

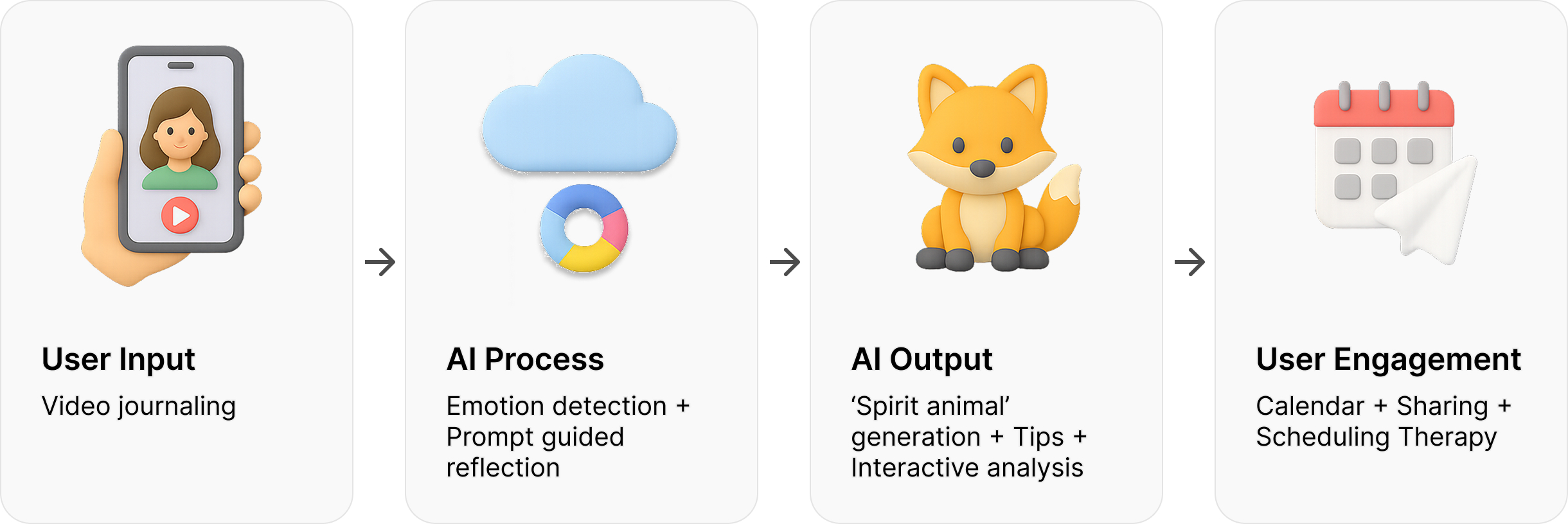

As a result, this is how we reimagined PokaWindow.

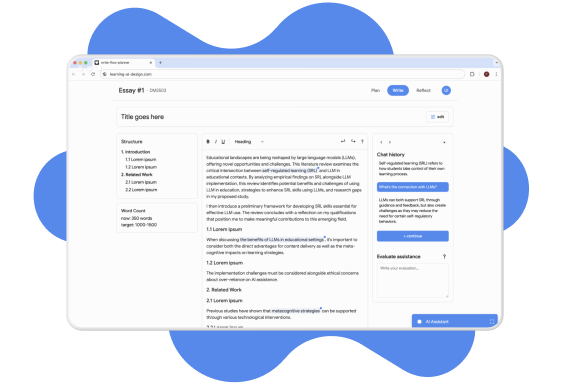

AI-UX Interaction flow of PokaWindow

Key Features

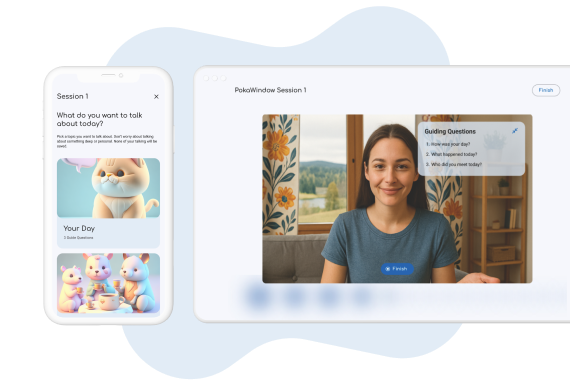

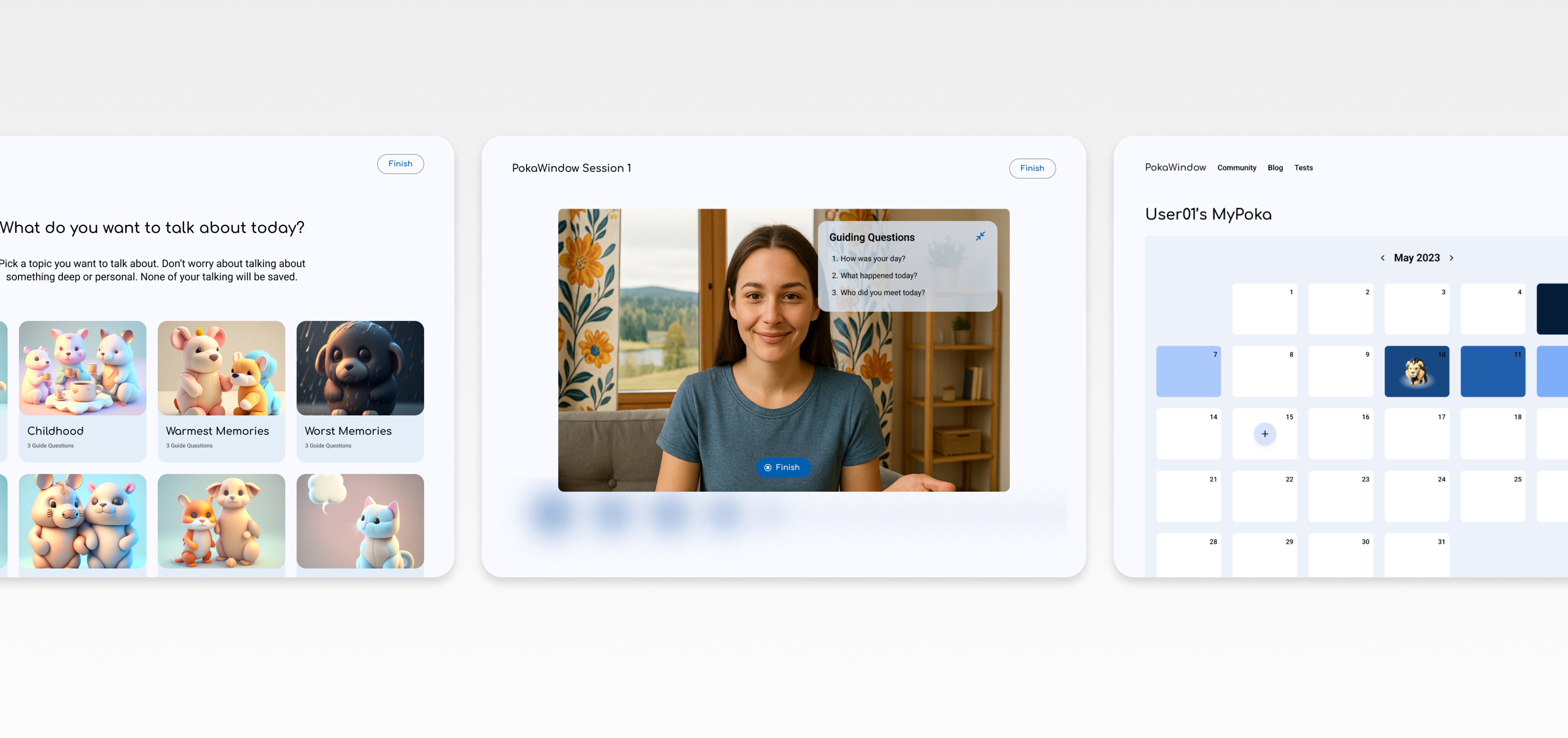

#1 Video Journaling

Users record daily reflections on a topic they choose, and decide what they see while recording: an AI avatar that responds non-verbally, themselves, or nothing.

During journaling, the AI detects emotion and responds gently, not with data but with cues and prompts that feel intuitive and personal.

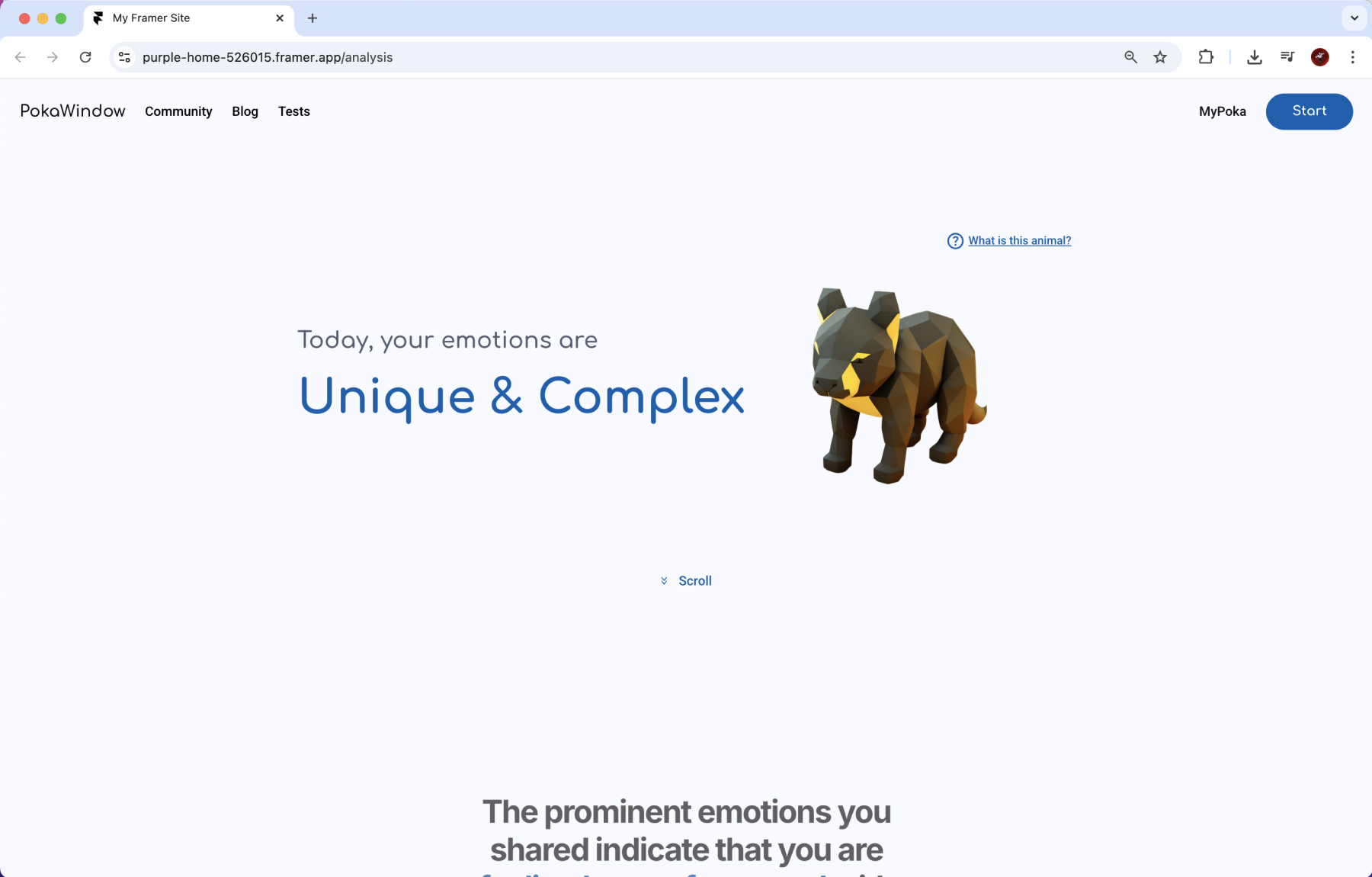

#2 Spirit Animal Feedback with Storytelling and Interactive Data

To balance trust and delight, we introduced the “Spirit Animal”, an AI-generated metaphor that reflects the user's mood in a playful yet meaningful way.

Each animal comes with a short narrative summary of the AI’s emotional analysis, followed by personalized suggestions. Rather than overwhelming users with raw emotion scores, we layered data behind a story-first approach.

For users who want to go deeper or share their emotional trends with a therapist, interactive visualizations surface the underlying data in a clear, approachable way. This enhances transparency while keeping the experience emotionally engaging and easy to use.
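As an illustration only, the story-first layering described above could be sketched as follows. This is not Pokamind's actual implementation: the emotion labels, animal names, story text, and the `build_feedback` helper are all hypothetical, and picking the single highest score is an assumed simplification.

```python
from dataclasses import dataclass

# Hypothetical mapping. The real product's emotion labels, animals, and
# narratives are not specified in this case study.
SPIRIT_ANIMALS = {
    "joy": ("Otter", "You seemed light and playful today."),
    "sadness": ("Whale", "You carried something heavy and deep today."),
    "anger": ("Tiger", "There was a lot of fire in you today."),
    "calm": ("Turtle", "You moved through today at a steady pace."),
}

# Assumed fallback for any emotion label without its own animal.
DEFAULT_ANIMAL = ("Fox", "Today was a mix of many feelings.")


@dataclass
class Feedback:
    animal: str    # shown first, as the playful metaphor
    story: str     # short narrative summary, shown with the animal
    details: dict  # raw scores, surfaced only when the user asks to go deeper


def build_feedback(scores: dict[str, float]) -> Feedback:
    """Turn raw emotion scores into story-first feedback."""
    if not scores:
        raise ValueError("scores must not be empty")
    # Assumed rule: the highest-scoring emotion decides the animal.
    dominant = max(scores, key=scores.get)
    animal, story = SPIRIT_ANIMALS.get(dominant, DEFAULT_ANIMAL)
    # Copy the scores so the detail layer is independent of the caller's dict.
    return Feedback(animal=animal, story=story, details=dict(scores))
```

The point of the structure is that the animal and story are the default view, while the numbers stay available in `details` for users who want transparency or a therapist-facing view.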

#3 My Poka - Mood Calendar

Each day’s spirit animal builds a colorful memory trail, which not only supports reflection, but also builds habit and emotional awareness over time.

On days when users can’t record video, they can quickly log their mood instead. This keeps engagement flexible and the mood data continuous.
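A minimal sketch of how the calendar might combine both kinds of entries is shown here. The `DayEntry` type, the `build_calendar` helper, and the rule that a video-derived mood takes priority over a quick log on the same day are assumptions for illustration, not details from the product.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DayEntry:
    day: date
    mood: str
    source: str  # "video" or "quick_log"


def build_calendar(
    video_moods: dict[date, str], quick_logs: dict[date, str]
) -> list[DayEntry]:
    """Merge video-derived moods and quick mood logs into one sorted trail."""
    entries = []
    # Walk every day that has at least one kind of entry, oldest first.
    for day in sorted(set(video_moods) | set(quick_logs)):
        if day in video_moods:
            # Assumed rule: a video entry wins when both exist for a day.
            entries.append(DayEntry(day, video_moods[day], "video"))
        else:
            entries.append(DayEntry(day, quick_logs[day], "quick_log"))
    return entries
```

Keeping the `source` on each entry lets the interface distinguish a spirit-animal day from a quick-log day while still showing an unbroken trail.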

Result

To validate emotional usability, I conducted 8 in-depth interviews and testing sessions with the prototype (a live website). I explored users’ psychological literacy, friction points, and how emotionally resonant the AI journaling flow felt.

The redesigned experience was met with overwhelmingly positive feedback:

- 100% of participants found it more intuitive and engaging than the previous MVP.

- Many described it as “surprisingly warm” and “something I’d want to use daily.”

- Most said it felt like “talking to a friend,” rather than being judged by AI.

- Over 70% said it would help them build a healthy habit of mental self-care.

On Comfort & Emotional Safety:

“I usually hate recording myself, but it was easy to talk with guiding questions and an avatar giving non-verbal response.” - User B, 22

“It felt like it understood how I was doing, without being clinical.” - User C, 24

On Delight & Engagement:

“I’d actually come back just to see my animal again.” - User E, 30

“It’s like it knew how I felt, and then made me smile.” - User D, 29

Reflection

This project pushed me to think beyond usability, and to design AI that feels emotionally intelligent, trustworthy, and genuinely helpful.

It taught me how to translate abstract emotional data into warm, playful interactions that users actually enjoy and return to.

As AI becomes more powerful, I believe UX must become more intentional: not just guiding behavior, but earning trust and building care into every interaction.